Welcome to the International Planning Competition 2023: Probabilistic and Reinforcement Learning Track

The International Probabilistic Planning Competition is organized in the context of the International Conference on Automated Planning and Scheduling (ICAPS). It empirically evaluates state-of-the-art planning systems on a number of benchmark problems. The goals of the IPC are to promote planning research, highlight challenges in the planning community, and provide new and interesting problems as benchmarks for future research.

Since 2004, probabilistic tracks have been part of the IPC under different names (as the International Probabilistic Planning Competition or as part of the uncertainty tracks). Following the editions of 2004, 2006, 2008, 2011, 2014, and 2018, the 7th IPPC will be held in 2023 and conclude together with ICAPS in July 2023 in Prague (Czech Republic). This time it is organized by Ayal Taitler and Scott Sanner.

Please forward the following calls to all interested parties:

We invite interested competitors to join the competition discussion:

ippc2023-rddl@googlegroups.com

| Event | Date |
|---|---|
| Infrastructure release with sample domains | October, 2022 |
| Call for domains and participants | October, 2022 |
| Final domains announcement | March 2, 2023 |
| Competitors registration deadline | March 15, 2023 |
| Dry-run | April 17, 2023 |
| Planner abstract submission | May 1, 2023 |
| Contest run | June 5-8, 2023 |
| Final planner abstract submission | June 13, 2023 |
| Results announced | July 11, 2023 |

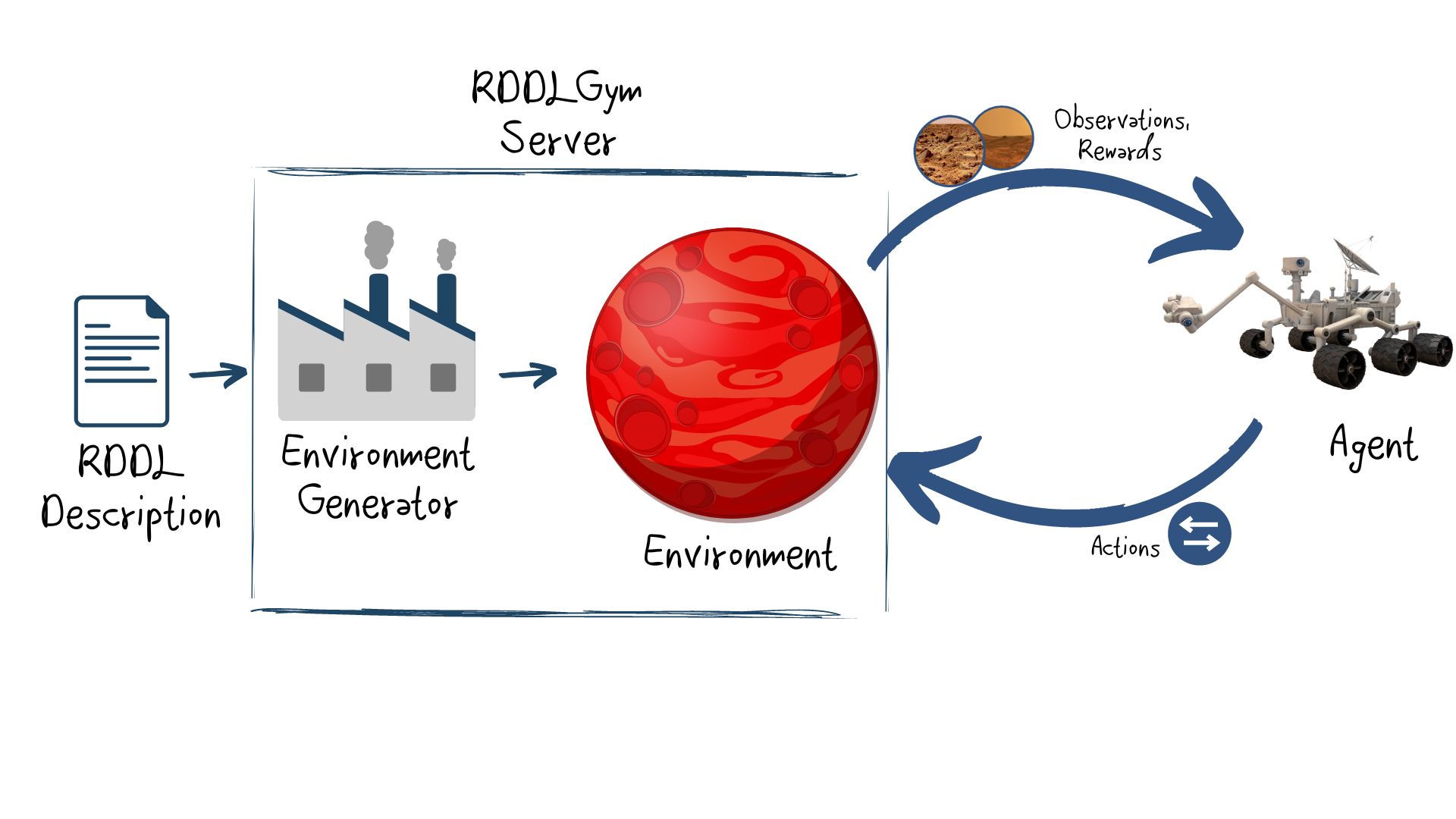

This year’s competition will use the generic pyRDDLGym, an autogeneration tool for gym environments from RDDL textual descriptions.

More information about the infrastructure, how to use it, and how to add user-defined domains can be found in the following short guide:

pyRDDLGym also comes with a set of auxiliary utils and baseline methods:

We provide a sample of RDDL domains here and include a list of the eight Final Competition Domains further below. We still encourage the community to contribute user-defined domains, or ideas you think the community should be aware of. While such contributions will not make it into the current competition, they will help enrich the problem database and may be mature enough to be included in a future competition.

In addition to the original domains, we have recreated some of the classical control domains in RDDL, illustrating how easy it is to generate domains in pyRDDLSim:

Note that there are additional domains from past competitions (IPPC 2011, IPPC 2014), which can also be used with pyRDDLSim:

Past competitions were entirely discrete; the focus of this year’s competition is on continuous and mixed discrete-continuous problems. However, everybody is welcome to take advantage of the earlier domains. All previous competition domains are available through the rddlrepository package/git.

Registration for the competition is now closed.

A record number of groups have registered, and we are expecting an amazing competition.

All competitors must submit an abstract of at most 2 pages, plus unlimited references, describing their method. We encourage the authors to include a public GitHub link to their planner in their abstract. If this GitHub link is not provided in the abstract, it will be required by the final competition submission, since the results of the competition winners will need to be independently verified by the competition organizers. Please format submissions in AAAI style (see instructions in the Author Kit), and use the camera-ready version with names and affiliations.

All abstracts must comply with the following requirements:

Abstract submission, due May 1, 2023.

An important requirement for IPC 2023 competitors is to give the organizers the right to post their paper and the source code of their learners/planners on the official IPC 2023 web site, and the source code of submitted planners must be released under a license allowing free non-commercial use.

Revised abstract submission, due June 13, 2023.

As a conclusion from the initial submission, we emphasize that all abstracts must comply with the instructions above. Think of the abstract as a short paper (2 pages + references) presenting your method. For your convenience we have created an example abstract, which you can use as a template if you wish - Latex | Word.

The competition will include:

| Domain | pyRDDLGym name |
|---|---|
| Race Car | RaceCar |
| Reservoir Control | Reservoir_continuous |
| Recommender Systems | RecSim |
| HVAC | HVAC |
| UAV | UAV_continuous |
| Power Generation | PowerGen_continuous |
| Mountain Car | MountainCar |
| Mars Rover | MarsRover |

Instance generators are available for all of these domains. The competition instances will be generated with these generators. Documentation of the generators is available here:

A mandatory stage for all competitors is to participate in a dry run of the competition. This stage will test both the competition infrastructure and procedure and the competitors' side, to prevent problems and misunderstandings at competition time.

The dry run will take place on April 17th, during which a 24 hour window will be open for submission of the competing method. Each method will be executed on two domains, and two instances each. The domains for the dry-run will be HVAC and RaceCar.

The submission must include the ID of the competing team, the unique permanent name of the competing method, and links to the GitHub repository (optional) and the Docker Hub image of the method (mandatory). For technical instructions and further explanation please see

Note that the competition will run on Singularity, and thus all Docker images must use the template and follow the instructions at the link above to ensure compatibility between the Docker image and the competition infrastructure on our HPC.
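The submission fields listed above can be pictured as a small record with one optional entry. The following is only an illustrative sketch: the class name `DryRunSubmission`, its field names, and the placeholder values are hypothetical and are not part of the official submission form.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DryRunSubmission:
    """Hypothetical record of the fields a submission must carry."""
    team_id: str                       # ID of the competing team
    method_name: str                   # unique, permanent name of the method
    dockerhub_image: str               # mandatory link to the method image
    github_url: Optional[str] = None   # optional link to the source repository

    def missing_fields(self) -> list:
        """Return the names of mandatory fields that are empty."""
        required = {
            "team_id": self.team_id,
            "method_name": self.method_name,
            "dockerhub_image": self.dockerhub_image,
        }
        return [name for name, value in required.items() if not value.strip()]


# Placeholder values for illustration only.
submission = DryRunSubmission(
    team_id="team-42",
    method_name="my-planner",
    dockerhub_image="exampleuser/my-planner",
)
print(submission.missing_fields())  # an empty list means nothing mandatory is missing
```

Leaving `github_url` unset is accepted by this check, mirroring the optional status of the repository link at the dry-run stage.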

We expect that your planner is capable of generating non-noop (potentially random) legal actions. The docker image and trace logs will be inspected to ensure that.

Dry-run submission, due April 17, 2023.

The competition week will take place Monday-Thursday, June 5 - June 8, 2023. Starting June 5th at 8:00 AM EDT, 3 instances will be released for each of the 8 domains. Competitors will have the 4 days to tune their methods for the 3 instances. At the end of the four days, a Docker image of at most 2GB containing the tuned method will be submitted. All submissions must be finalized by June 8th, 8:00 PM EDT. Please make sure your submission is based on the template in the demo NoOp docker example.

Competition submission, due June 8th, 8 PM EDT 2023.

The competition version of pyRDDLGym, containing the competition instances, is available at the pyRDDLGym git repository under the branch IPPC2023:

For the 4 days of the competition you will have access to 3 of the 5 evaluation instances through the IPPC2023 branch. The released instances are indexed 1, 3, and 5, while instances 2 and 4 are hidden and will be published only after the submission deadline has passed. The instances are generally designed to be in increasing order of difficulty, with 1 being the easiest and 5 the hardest. The competition instances have unique names, ‘instance#c.rddl’ (# stands for 1, 3, or 5), in the pyRDDLGym examples tree. The instances are accessible through the ExampleManager object, like all other pyRDDLGym examples. The following example shows how to access competition instance 1 of the ‘HVAC’ domain:

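    from pyRDDLGym import RDDLEnv
    from pyRDDLGym import ExampleManager

    # Look up the HVAC domain among the bundled pyRDDLGym examples
    EnvInfo = ExampleManager.GetEnvInfo('HVAC')
    # Build the environment for competition instance 1 (key '1c')
    myEnv = RDDLEnv.RDDLEnv(domain=EnvInfo.get_domain(), instance=EnvInfo.get_instance('1c'))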
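The naming scheme described above can be sketched in plain Python without importing pyRDDLGym. The helper names below are hypothetical, and the keys '3c' and '5c' are an assumption extrapolated from the '1c' key used in the example; only the 'instance#c.rddl' pattern and the released indices come from the text.

```python
RELEASED_INDICES = (1, 3, 5)  # released during the competition week
HIDDEN_INDICES = (2, 4)       # published only after the submission deadline


def competition_instance_key(index: int) -> str:
    """Key of the form '1c', as passed to EnvInfo.get_instance above."""
    if index not in RELEASED_INDICES:
        raise ValueError(f"instance {index} is not released during the competition")
    return f"{index}c"


def competition_instance_file(index: int) -> str:
    """File name following the 'instance#c.rddl' pattern."""
    return f"instance{competition_instance_key(index)}.rddl"


keys = [competition_instance_key(i) for i in RELEASED_INDICES]
files = [competition_instance_file(i) for i in RELEASED_INDICES]
print(keys)
print(files)
```

Each key could then be passed to `EnvInfo.get_instance` in a loop to build one environment per released instance.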

For your convenience, we have added the file InstGenExample.py, which contains the generation code and the parameters for each of the 3 competition instances.

The file is available in the root folder of pyRDDLGym (alongside the GymExample.py file). Note that only the version in the IPPC2023 branch contains the competition parameters.

Competitors should self-report training specifications (how many machines and machine specifications).

Manual encoding of explicit domain and instance knowledge is prohibited. You are, however, allowed to use domain-specific heuristics and other automatic domain-specific methods, as long as you report them.

Remark: we will use the same five instances at evaluation time in this edition of the competition in order to facilitate reinforcement learning competitors who may need to learn per-instance.

An updated abstract containing any domain knowledge declaration and the training specification must be submitted by June 13th, 2023.

Revised abstract submission, due June 13, 2023.

After June 8, 2023, competitor container submissions will be evaluated using an 8-core CPU (no GPU) with 32 GB of RAM, on 50 randomized trials for each instance of the 8 competition domains. The evaluation instances for each domain will include the 3 pre-released instances plus 2 additional, never-before-seen instances, for a total of 5 instances per domain.

The average over all 50 trials will be taken as the raw score for each instance.

Normalized [0,1] instance scores will be computed according to the following lower and upper bounds:

A planner that does worse than 0 on this normalized scale will receive a 0. Each instance will have an hour (60 minutes) for general initialization, and then each trial will have a 4-minute time limit; a total of 60 + 50*4 = 260 minutes will be allocated per instance, with independent timers for each stage (you cannot use 200 minutes for initialization and then do all 50 episodes in 60 minutes). Failure to execute any trial (e.g., a crash) for an instance, or exceeding the time limit in any trial for an instance, will lead to an overall normalized score of 0 for that instance. No competitor can exceed a score of 1, by definition. Note that scoring less than 0 will result in rounding to zero (adversarial acting).
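The independent timers can be made concrete with a short check. This is a sketch for self-testing only, not the organizers' evaluation code; the function and constant names are hypothetical, while the 60-minute, 4-minute, and 50-trial figures come from the rules above.

```python
INIT_LIMIT_MIN = 60   # general initialization budget per instance
TRIAL_LIMIT_MIN = 4   # budget for each individual trial
NUM_TRIALS = 50       # randomized trials per instance


def total_budget_minutes() -> int:
    """Total time allocated per instance: 60 + 50*4 minutes."""
    return INIT_LIMIT_MIN + NUM_TRIALS * TRIAL_LIMIT_MIN


def within_limits(init_minutes: float, trial_minutes: list) -> bool:
    """True only if every stage stays inside its own independent timer."""
    if init_minutes > INIT_LIMIT_MIN:
        return False
    if len(trial_minutes) != NUM_TRIALS:
        return False  # a missing trial is treated as a failure to execute
    return all(t <= TRIAL_LIMIT_MIN for t in trial_minutes)


print(total_budget_minutes())
# 200 minutes of initialization is rejected even though the sum is 260 minutes.
print(within_limits(200, [1.2] * NUM_TRIALS))
```

The second call shows why the budgets cannot be pooled: the run fits the 260-minute total but still violates the initialization timer.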

Normalized domain scores will be computed as an average of normalized instance scores.

An overall competition score will be computed as an average of normalized domain scores.
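The scoring pipeline above (raw average, normalization, then averaging per domain and overall) can be sketched as follows. This is not the organizers' scoring script: the function names are hypothetical, the per-instance bounds are left as parameters because their values are not given here, and the linear rescaling between the bounds is an assumption about how the normalization is done.

```python
from statistics import mean


def normalized_instance_score(trial_returns, lower, upper, failed=False):
    """Average the trial returns, rescale to [0, 1], and clip.

    `lower` and `upper` are the per-instance bounds (values not specified
    here). The linear rescaling between them is an assumption.
    """
    if failed:
        return 0.0  # a crash or timeout in any trial zeroes the instance
    if upper <= lower:
        raise ValueError("upper bound must exceed lower bound")
    raw = mean(trial_returns)  # raw score: average over all trials
    score = (raw - lower) / (upper - lower)
    return min(1.0, max(0.0, score))  # scores below 0 are rounded up to 0


def domain_score(instance_scores):
    """Normalized domain score: average of normalized instance scores."""
    return mean(instance_scores)


def overall_score(domain_scores):
    """Overall competition score: average of normalized domain scores."""
    return mean(domain_scores)


# Made-up numbers, for illustration only.
good = normalized_instance_score([10.0, 20.0, 30.0], lower=0.0, upper=40.0)
bad = normalized_instance_score([-5.0, -15.0], lower=0.0, upper=40.0)
crashed = normalized_instance_score([35.0], lower=0.0, upper=40.0, failed=True)
print(good, bad, crashed)
print(domain_score([good, bad, crashed]))
```

Averaging first within a domain and then across domains weights every domain equally in the overall score.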

The results were presented at the 33rd International Conference on Automated Planning and Scheduling (ICAPS) on July 11 in Prague. The presentation slides of this talk contain additional details.

The winners:

Contact us: ippc2023-rddl@googlegroups.com